Imagine you're at the helm of a thriving e-commerce business. Your website is buzzing with traffic and sales are skyrocketing, but there's a snag: you've got heaps of data scattered across multiple platforms, and it's keeping you from making the data-driven decisions that could propel your business forward. That's where building an ETL pipeline comes into play.

You've likely heard that ETL (Extract, Transform, Load) is the backbone of data integration. By streamlining your data processes, an ETL pipeline can turn your business into a data-savvy powerhouse. It's like assembling a dream team where each player knows their role, ensuring that data flows seamlessly from its source to your fingertips, ready for action.

Crafting an ETL pipeline isn't just for the tech giants; it's for businesses like yours that are ready to unlock the potential of their data. Let's jump into how you can build an ETL pipeline that turns your data chaos into a strategic asset.

Understanding the ETL Pipeline

When you're looking to harness the power of data, understanding the backbone of any data operation is key. Let's break down the ETL pipeline.

Extract is the first step of the process, where data is gathered from various sources. Whether it's structured data from SQL databases or unstructured data from logs and social media, the extraction must be precise to ensure data quality.

Transform is the second step. Raw data is rarely in a state ready for analysis, so here you handle tasks such as:

- Cleaning data to remove inaccuracies or duplicates

- Converting data into a format suitable for analysis

- Enriching data by combining it with other sources

This step is crucial for accurate, reliable insights.

The final stage, Load, puts your processed data into a target system, like a data warehouse, to be used for business intelligence and analytics. Depending on your needs, you may opt for a full load or an incremental load to keep your data up to date.
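To make the three stages concrete, here is a minimal sketch using only the Python standard library. It assumes a small hypothetical CSV export of orders as the source and an in-memory SQLite database standing in for the data warehouse; the function names `extract`, `transform`, and `load` are illustrative, not part of any particular tool.

```python
import csv
import io
import sqlite3

# Hypothetical raw export standing in for a source system (e.g. an order CSV)
RAW_ORDERS = """order_id,customer,amount
1,alice,19.99
2,bob,5.00
2,bob,5.00
3,carol,
"""

def extract(raw_text):
    """Extract: read rows from the source into dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transform: drop duplicates and incomplete rows, convert types."""
    seen = set()
    clean = []
    for row in rows:
        if not row["amount"] or row["order_id"] in seen:
            continue  # skip rows with a missing amount or a repeated order ID
        seen.add(row["order_id"])
        clean.append((int(row["order_id"]), row["customer"].title(), float(row["amount"])))
    return clean

def load(records, conn):
    """Load: write the cleaned records into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # in-memory SQLite stands in for a warehouse
load(transform(extract(RAW_ORDERS)), conn)
print(conn.execute("SELECT * FROM orders").fetchall())
```

Real pipelines swap each function for connectors to actual systems, but the shape (extract, then transform, then load) stays the same.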

What makes ETL pivotal for business analytics is its capacity to consolidate disparate data, providing a cohesive view across the organization. Why is this cohesion so important? Simply put, it enables better decision-making by breaking down data silos.

Consider a tool like Apache NiFi, which simplifies the automation of data flow and can ease the ETL process.

By building a tailored ETL pipeline, you can streamline operations, minimize errors, and ensure timely access to critical data for analysis. Remember, an efficient ETL pipeline is not a luxury; it's a necessity in today's data-centric world.

Benefits of Building an ETL Pipeline

When diving into the arena of data management, creating an ETL pipeline is a strategic move that can elevate your business's efficiency. An ETL pipeline automates the flow of data and ensures that your data is not only accurate but also accessible when and where you need it most.

One significant benefit of an ETL pipeline is the reduction in manual errors. With less human intervention, there are fewer opportunities for mistakes to creep in, which leads to more reliable analytics. Consider the impact of clean data on your business decisions; it's indispensable. Tools like Apache NiFi specialize in automating data flows, and their documentation covers these capabilities in depth.

Another crucial advantage lies in the ability to handle large volumes of data. As your business grows, so does the amount of data you must manage. A well-designed ETL pipeline can scale with that growth, absorbing surges in data volume without manual rework.

Also, you'll find that integrating an ETL pipeline can lead to significant time savings. Data processing tasks that once took hours, if not days, are streamlined into a process that's both swift and continuous. This allows your team to focus on strategic tasks that propel your business forward.

With the transformation stage of your ETL pipeline, you unlock the potential to enrich your data, enhancing its value far beyond its original state. The SQL documentation for your database offers insight into more elaborate transformation techniques.
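As a small illustration of enrichment, the sketch below joins orders with a customer lookup table to attach a region to each order. The tables, columns, and the `'unknown'` fallback are hypothetical, and SQLite is used only because it ships with Python; the same `LEFT JOIN` pattern applies in most SQL warehouses.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL);
    CREATE TABLE customers (customer_id INTEGER, region TEXT);
    INSERT INTO orders VALUES (1, 10, 19.99), (2, 11, 5.00), (3, 12, 42.50);
    INSERT INTO customers VALUES (10, 'EU'), (11, 'US');
""")

# Enrich each order with the customer's region. LEFT JOIN keeps orders whose
# customer has no match, and COALESCE labels those rows 'unknown'.
enriched = conn.execute("""
    SELECT o.order_id, o.amount, COALESCE(c.region, 'unknown') AS region
    FROM orders AS o
    LEFT JOIN customers AS c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()
print(enriched)
```

Using a `LEFT JOIN` rather than an inner join is a deliberate choice here: unmatched orders stay visible instead of silently disappearing from the enriched output.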

If you're considering the tools and processes that can best support your ETL pipeline, remember to evaluate them closely. Look for features like:

- Data integration from diverse sources

- Real-time data processing capabilities

- Scalability to adapt to data volume and complexity

- Robust error handling and recovery mechanisms


By incorporating these attributes, you're setting the stage for a solution that's not just functional today but will evolve with your data needs over time.

Planning Your ETL Pipeline

As you embark on building an ETL pipeline, it's critical to strategize and plan ahead. Approaching the process methodically will save you time and resources while ensuring your pipeline is efficient and scalable.

Assessing Data Sources and Quality

The foundation of your ETL pipeline lies in thoroughly understanding your data sources. You'll need to:

- Identify where your data originates

- Determine the formats of your incoming data

- Evaluate the quality and accuracy of the data

- Understand the refresh rate or how often your data sources are updated

Sound knowledge of your data sources can prevent issues during extraction and help anticipate the transformation necessary before loading.
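One practical way to start assessing a source is to profile a sample of it. The sketch below, using only the standard library, counts rows, missing values per column, and duplicate keys in a CSV sample; the `profile` function, the sample data, and the choice of `customer_id` as the key are all hypothetical.

```python
import csv
import io
from collections import Counter

def profile(raw_text, key):
    """Summarize row count, missing values per column, and duplicate keys."""
    rows = list(csv.DictReader(io.StringIO(raw_text)))
    missing = Counter()
    for row in rows:
        for column, value in row.items():
            if value is None or value.strip() == "":
                missing[column] += 1
    key_counts = Counter(row[key] for row in rows)
    duplicates = sorted(k for k, n in key_counts.items() if n > 1)
    return {"rows": len(rows), "missing": dict(missing), "duplicate_keys": duplicates}

# Hypothetical sample pulled from one source system
sample = """customer_id,email,signup_date
10,a@example.com,2024-01-05
11,,2024-01-07
11,b@example.com,2024-01-07
"""
print(profile(sample, key="customer_id"))
```

A profile like this, run against each source, gives you concrete numbers for the quality questions in the list above before you commit to a transformation design.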

Designing the Transformation Process

The transformation stage is where your data gets shaped and enhanced. Here's where to focus:

- Outline the data cleaning steps required

- Define the conversion rules for standardizing data formats

- Plan how to enrich your data to add value

- Decide on validation checks to ensure data integrity

This design will be a blueprint for the rules and processes that your ETL pipeline must follow.

Mapping Out Load Strategies

Deciding on the right load strategy is crucial for performance and accessibility:

- Choose between a full load or incremental load approach

- Determine the target system, whether it’s a data warehouse, database, or other storage solution

- Plan for potential scalability as datasets grow

By considering these aspects, you'll ensure that your data is not only correctly moved but also optimally stored.

Balancing Performance and Costs

Balancing the trade-off between performance and cost can define the success of your ETL pipeline:

- Assess the computing resources needed for processing

- Anticipate the cost implications of data storage and transfer

- Optimize the process to align with your budget without compromising on performance

Finally, don't forget to document your ETL pipeline design extensively. This documentation is pivotal for future maintenance and scaling efforts and should include the rationale behind each decision made during the planning phase.

Extracting Data from Multiple Platforms

When you're building an ETL pipeline, extracting data is the first hurdle. You'll encounter numerous sources, each with its unique format and access protocols. You must grapple with databases, cloud storage, APIs, and various file formats. This complexity necessitates robust, adaptable tools capable of interfacing with these diverse platforms.

Apache NiFi, for example, shines in this area. Its system connects seamlessly to multiple data streams, pulling information efficiently from different systems. Whether you're dealing with real-time data flowing through Apache Kafka or pulling records from a traditional SQL database, NiFi's processors streamline the extraction process.

Consider, too, the structure of the data. You may need to gather JSON objects from web services, CSV files from legacy systems, or unstructured data from social media platforms. Each format tests your pipeline's flexibility, so you need a solid understanding of how each one is structured. For accurate and efficient data extraction, familiarize yourself with JSON syntax and how relational databases work.
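A common approach is to map every source format into one shared record shape as early as possible. The sketch below parses a JSON API payload and a legacy CSV export into the same dictionary layout; the field names (`sku`, `qty`, `SKU`, `Quantity`) and sample payloads are hypothetical.

```python
import csv
import io
import json

def from_json(payload):
    """Parse a JSON array of objects, e.g. from a web service response."""
    return [{"sku": item["sku"], "qty": int(item["qty"])} for item in json.loads(payload)]

def from_csv(text):
    """Parse CSV text, e.g. an export from a legacy system with different headers."""
    return [
        {"sku": row["SKU"], "qty": int(row["Quantity"])}
        for row in csv.DictReader(io.StringIO(text))
    ]

api_payload = '[{"sku": "A-1", "qty": 3}, {"sku": "B-2", "qty": "1"}]'
legacy_csv = "SKU,Quantity\nC-3,7\n"

# Both sources now share one record shape, ready for the transform stage
records = from_json(api_payload) + from_csv(legacy_csv)
print(records)
```

Note that `int()` also normalizes the quantity the API sent as the string `"1"`, a small example of how sources disagree even on basic types.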

Extraction is a critical phase that lays the groundwork for subsequent transformation and loading. Below are essential considerations:

- Consistency in Data Collection: Ensure your extraction methods result in consistent and reliable datasets.

- Efficiency Matters: Minimize the system's resource consumption during extraction to avoid hampering overall performance.

- Security Is Key: Never compromise on security protocols while accessing sensitive data sources.
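On the efficiency point, one simple technique is to read large query results in fixed-size batches instead of pulling everything into memory at once. This sketch uses the standard `sqlite3` cursor's `fetchmany`; the `events` table and the batch size of 1000 are assumptions for illustration, and most Python database drivers expose a similar cursor method.

```python
import sqlite3

def extract_in_batches(conn, query, batch_size=1000):
    """Yield rows in fixed-size batches instead of loading the whole result at once."""
    cursor = conn.execute(query)
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        yield batch

# Hypothetical source table with 2,500 rows
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER)")
conn.executemany("INSERT INTO events VALUES (?)", [(i,) for i in range(2500)])

sizes = [len(batch) for batch in extract_in_batches(conn, "SELECT id FROM events", batch_size=1000)]
print(sizes)
```

Because the function is a generator, each batch can be transformed and loaded before the next one is fetched, which keeps memory use roughly constant regardless of table size.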

As your business grows, the data extraction needs will expand. Your chosen tools should scale without significant restructuring efforts. Keep in mind that in extracting data, what works today may not suffice tomorrow. Always keep an eye on evolving data trends and software updates to keep your ETL pipeline effective and future-proof.

Transforming and Cleaning Data

When you've successfully extracted data from its sources, it's time to tackle the transformation phase of your ETL pipeline. Data transformation is the process of converting raw data into a format that's more useful for analysis. This step ensures that your data is accurate, consistent, and ready for complex queries.

In data transformation, you'll deal with a multitude of tasks that can include:

- Normalization: Scaling numeric values into a common range (for example, 0 to 1) so that no field dominates simply because of its magnitude.

- De-duplication: Eliminating duplicate records to enhance data quality.

- Data type conversion: Changing data types for compatibility with other systems.

- Data validation: Ensuring that the data meets certain quality standards before loading.

This stage often requires a robust set of tools. Apache Spark provides powerful data processing capabilities that can handle these tasks efficiently at scale, while pandas in Python is great for more intricate data manipulation.
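The four transformation tasks listed above can be sketched in plain Python to show what each one does. This is an illustration under assumed inputs (hypothetical `order_id` and `amount` fields, and a made-up rule that amounts must be non-negative); pandas and Spark offer built-in equivalents, such as `drop_duplicates` in pandas, that you would typically use at scale.

```python
def transform_batch(rows):
    # De-duplication: keep the first occurrence of each order_id
    unique = {}
    for row in rows:
        unique.setdefault(row["order_id"], row)

    # Data type conversion: strings from the source become int/float
    typed = [
        {"order_id": int(r["order_id"]), "amount": float(r["amount"])}
        for r in unique.values()
    ]

    # Data validation: reject negative amounts before they reach the warehouse
    valid = [r for r in typed if r["amount"] >= 0]
    if not valid:
        return []

    # Normalization: min-max scale amounts into [0, 1]
    amounts = [r["amount"] for r in valid]
    low, high = min(amounts), max(amounts)
    span = (high - low) or 1.0  # avoid dividing by zero when all values are equal
    for r in valid:
        r["amount_scaled"] = (r["amount"] - low) / span
    return valid

raw = [
    {"order_id": "1", "amount": "10.0"},
    {"order_id": "2", "amount": "30.0"},
    {"order_id": "2", "amount": "30.0"},
    {"order_id": "3", "amount": "-5.0"},
    {"order_id": "4", "amount": "20.0"},
]
print(transform_batch(raw))
```

The ordering is a design choice: validating before normalizing keeps invalid values (like the negative amount above) from distorting the minimum and maximum used for scaling.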

Cleaning data is another critical aspect of this phase. You'll often encounter incomplete, incorrect, or irrelevant parts of data that need to be addressed. Data cleaning may involve:

- Filling missing values: Perhaps with median or mean values or by using a predictive model.

- Identifying outliers: This may include statistical methods to find anomalies.

- Correcting errors: Like syntax or spelling mistakes which could skew analysis results.

A successful cleaning process will significantly improve your overall data quality and reliability. Employing tools such as OpenRefine can greatly simplify the cleaning process with its user-friendly interface.
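Two of the cleaning steps above, filling missing values and identifying outliers, can be sketched with Python's `statistics` module. The median fill and Tukey's fences (flagging values more than 1.5 interquartile ranges outside the middle 50%) are one common choice among many, and the `delivery_days` data is hypothetical.

```python
import statistics

def fill_missing_with_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

delivery_days = [2, 3, None, 4, 3, 2, 40, None, 3]
filled = fill_missing_with_median(delivery_days)
print(filled)
print(iqr_outliers(filled))
```

Flagged outliers are worth reviewing rather than deleting automatically; a 40-day delivery might be a typo, or it might be a real problem the business needs to see.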

By diligently transforming and cleaning your data, you're setting up your ETL pipeline for more efficient operations and more insightful analyses. Equipping yourself with these skills and tools ensures that you can effectively manage and prepare your data for the complex demands of today’s data-driven world.

Loading Data into Destination

After the rigorous transformation process, you're now ready to move into the load phase, where data finds its new home for analysis and business intelligence. This stage is crucial because efficiency and integrity are paramount as data moves into your data warehouse or database.

When loading data, there are generally two approaches: full loading and incremental loading. Full loading involves wiping the slate clean and reloading the entirety of data each time. It's simple but can be resource-intensive. In contrast, incremental loading updates the destination with only the changes since the last load, greatly reducing the load time and resource usage.
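The difference between the two approaches can be sketched with SQLite. The incremental version below assumes each source row carries an `updated_at` timestamp and that you persist a "watermark" (the latest timestamp already loaded) between runs; the table layout and dates are hypothetical. ISO-8601 date strings compare correctly as plain strings, which the sketch relies on.

```python
import sqlite3

def full_load(target, rows):
    """Full load: wipe the target table and reload every row."""
    target.execute("DELETE FROM orders")
    target.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    target.commit()

def incremental_load(target, source_rows, last_watermark):
    """Incremental load: upsert only rows changed after the last watermark."""
    changed = [r for r in source_rows if r[2] > last_watermark]
    target.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", changed)
    target.commit()
    # The new watermark is the latest change timestamp seen in this run
    return max((r[2] for r in changed), default=last_watermark)

target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)")

full_load(target, [(1, "paid", "2024-05-01"), (2, "pending", "2024-05-01")])
source = [(1, "paid", "2024-05-01"), (2, "shipped", "2024-05-03"), (3, "paid", "2024-05-04")]
watermark = incremental_load(target, source, last_watermark="2024-05-01")
print(watermark, target.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

The incremental run touches only two rows here; with millions of unchanged rows, that difference is where the savings in load time and resources come from.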

To maintain data integrity, ensure checks and balances are in place. This includes verifying that the data loaded matches the source data in terms of count and content. Also, employ error-handling strategies to capture any discrepancies during the load process.
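A simple reconciliation check compares row counts and a content fingerprint between source and target after each load. The sketch below hashes rows in key order with SHA-256; it assumes both sides return rows with identical Python types (true here because both are SQLite) and that the table and key names are trusted identifiers, since they are interpolated directly into SQL. The `make_db` helper and sample data are hypothetical.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table, key):
    """Return (row count, SHA-256 digest of all rows ordered by key)."""
    digest = hashlib.sha256()
    count = 0
    # table and key must be trusted identifiers, not user input
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()

def reconcile(source, target, table, key):
    """Raise if the loaded table differs from the source in count or content."""
    src = table_fingerprint(source, table, key)
    dst = table_fingerprint(target, table, key)
    if src[0] != dst[0]:
        raise ValueError(f"row count mismatch: source={src[0]} target={dst[0]}")
    if src[1] != dst[1]:
        raise ValueError("row content mismatch between source and target")

def make_db(rows):
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn

source = make_db([(1, 19.99), (2, 5.0)])
target = make_db([(1, 19.99), (2, 5.0)])
reconcile(source, target, "orders", "order_id")  # passes silently

target.execute("UPDATE orders SET amount = 6.0 WHERE order_id = 2")
try:
    reconcile(source, target, "orders", "order_id")
except ValueError as err:
    print("load check failed:", err)
```

When source and target are different database engines, you would typically compare per-column aggregates (sums, min/max) instead of raw row hashes, because type representations often differ between systems.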

As you load your data, consider the destination system’s performance. Large volumes of data can impact system operations; hence, it’s recommended to schedule loads during off-peak hours. To make this task seamless, you might use scheduling tools like Apache Airflow, which not only allows you to automate your ETL workflows but also provides advanced monitoring capabilities.

Real-time processing has become increasingly important in recent years. Systems such as Apache Kafka can help stream data into your store, enabling real-time analytics and decision-making.

Here's how you might integrate Kafka with your ETL pipeline:

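```python
from kafka import KafkaProducer  # provided by the kafka-python package

# Connect to a Kafka broker (assumed here to be running locally on the default port)
producer = KafkaProducer(bootstrap_servers='localhost:9092')

# Send transformed data to the destination topic; send() is asynchronous
producer.send('destination_topic', b'Your transformed data')

# Block until buffered messages are delivered, then release resources
producer.flush()
producer.close()
```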

Understand that as the data world becomes more complex, mastering the load process is essential for maintaining a competitive edge. Tools and methods will continue to evolve, but the principles of efficiency, reliability, and integrity remain constant.

Automation and Monitoring of the ETL Pipeline

When you're building an ETL pipeline, automation is key. It's what will save you from the tedious, error-prone task of manually processing data. With the right tools, you can automate the execution of your ETL tasks, ensuring consistency and reliability in your data processes.

One such tool that stands out is Apache Airflow, which allows you to programmatically author, schedule, and monitor workflows. Automating your ETL pipeline not only enhances efficiency but also helps you scale your data operations effectively.

Effective monitoring complements automation by offering insights into the ETL process, helping you quickly identify and address any issues that arise. Monitoring tools track the health, performance, and reliability of ETL processes. They provide alerts and detailed reports on failures, delays, or any anomalies detected in the data flow. This real-time monitoring capability is vital for maintaining data quality and pipeline performance.

Remember, as your data sources and volumes grow, the complexity of your ETL pipeline will increase. Automation and monitoring become non-negotiable, ensuring that your ETL pipeline can handle the scale without compromising on quality. Data professionals often use Incident Management Systems like PagerDuty or VictorOps to keep on top of any issues that monitoring tools pick up.
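Schedulers like Airflow provide retries out of the box (tasks accept `retries` and `retry_delay` arguments), but the underlying idea is easy to sketch in plain Python: retry a failing step with exponential backoff, log each failure, and raise an alert once retries run out. The `send_alert` hook below is hypothetical; in practice it would call your incident management tool.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("etl")

def send_alert(message):
    """Hypothetical hook: replace with a call to your paging or chat tool."""
    log.critical("ALERT: %s", message)

def run_with_retries(task, name, attempts=3, backoff_seconds=1.0):
    """Run an ETL task, retrying on failure and alerting once retries are exhausted."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as exc:
            log.warning("%s failed on attempt %d/%d: %s", name, attempt, attempts, exc)
            if attempt == attempts:
                send_alert(f"{name} failed after {attempts} attempts")
                raise
            time.sleep(backoff_seconds * 2 ** (attempt - 1))  # exponential backoff

# Hypothetical extract step that fails once before succeeding
calls = {"n": 0}

def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 2:
        raise ConnectionError("source database unavailable")
    return ["row-1", "row-2"]

result = run_with_retries(flaky_extract, "extract_orders", backoff_seconds=0.1)
print(result)
```

Transient failures such as a briefly unavailable database resolve themselves on retry, so only persistent failures reach a human.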

In addition, a robust ETL pipeline requires a logging mechanism to capture detailed information about every operation performed within the ETL process. Logs play a crucial role in diagnosing problems and optimizing the pipeline for better performance.
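A lightweight way to get consistent logs from every step is a decorator that records when a step starts, how long it took, how many rows it produced, and any error it raised. This is a sketch using Python's standard `logging` module; the `logged_step` decorator and the `clean_orders` step are hypothetical names, and the key=value message format is just one convention that log search tools handle well.

```python
import functools
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(name)s %(levelname)s %(message)s")
log = logging.getLogger("etl")

def logged_step(func):
    """Log start, duration, and output row count for an ETL step; log and re-raise errors."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        log.info("step=%s status=started", func.__name__)
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception:
            log.exception("step=%s status=failed", func.__name__)
            raise
        elapsed = time.perf_counter() - start
        rows = len(result) if hasattr(result, "__len__") else "n/a"
        log.info("step=%s status=succeeded rows=%s seconds=%.3f", func.__name__, rows, elapsed)
        return result
    return wrapper

@logged_step
def clean_orders(rows):
    """Hypothetical step: drop orders with no amount."""
    return [r for r in rows if r.get("amount") is not None]

cleaned = clean_orders([{"amount": 5}, {"amount": None}])
```

Logging row counts at every step also makes it easy to spot where records go missing between extraction and load.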

Best Practices for Building an ETL Pipeline

As the need for efficient data handling grows, following best practices when constructing your ETL pipeline becomes pivotal. It's not just about moving data; it's about ensuring that it's done with precision and foresight.

Start with a Clear Data Model: Before you jump into the construction of your ETL pipeline, it’s essential to have a well-defined data model. Understand the data you are dealing with and establish standardized structures to maintain consistency across all data sources.

Employ Scalable Tools: As your business grows, so too will your data processing needs. Use scalable tools such as Apache Spark, which can handle both batch and real-time processing, allowing for flexibility as data volumes increase.

Ensure Data Quality: From the outset, carry out processes to ensure data quality. Data validation, error tracking, and reconciliation should be part of your regular operations. Employ tools like OpenRefine for data cleaning and create validation checks within your pipeline to catch errors early.

Script Maintainable Code: Keep your code clean and maintainable. You’ll thank yourself later when updates or changes are needed. Comment your code generously, apply consistent naming conventions, and use version control systems like Git to track changes.

Optimize Transformation Logic: The heart of your ETL pipeline is its ability to transform raw data into actionable insights. Strive for performance optimization in your transformation logic. By minimizing resource-intensive operations and caching intermediate results when necessary, you ensure smoother operations.

Incorporate Automation and Monitoring: Don't neglect the need for automation and monitoring within your ETL pipeline. With tools like Apache Airflow, you can automate pipeline tasks efficiently. Also, incorporate real-time monitoring tools to stay ahead of potential issues, using systems such as Prometheus and Grafana for comprehensive insights into your ETL pipeline's health.

Conclusion

Mastering the art of ETL pipeline construction sets you up for success in today's data-driven world. With the right approach and tools, you'll transform raw data into actionable insights, propelling your business forward. Remember to prioritize a clear data model, leverage scalable solutions, and maintain high data quality. By scripting maintainable code and optimizing your transformation logic, you ensure your pipeline remains robust and efficient. Don't forget to automate and monitor your processes for the best results. Equipped with these best practices, you're now ready to build an ETL pipeline that stands the test of time and complexity. Get started and watch your data strategy elevate to new heights.

Looking to do more with your data?

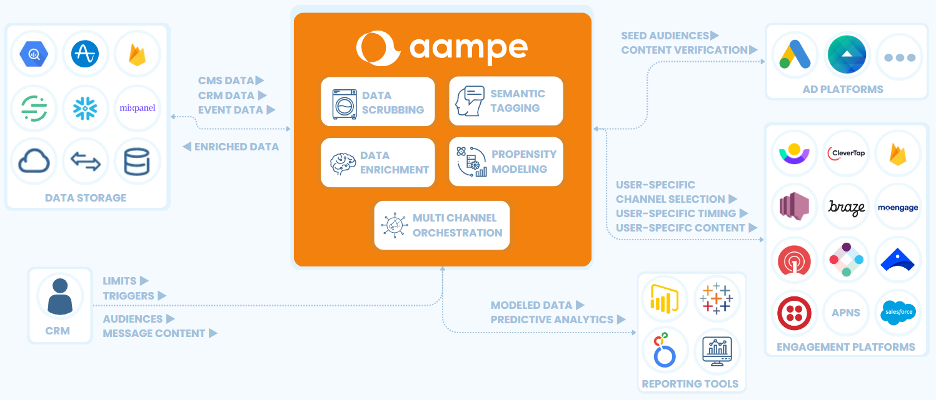

Aampe helps teams use their data more effectively, turning vast volumes of unstructured data into effective multi-channel user engagement strategies.