Data pipeline architecture is crucial for organizations looking to streamline their data flow from its initial collection point to its final destination for analysis and usage. As data volume continues to grow exponentially, it becomes imperative to have an efficient means of transporting this data. This architecture not only helps in managing data but also in transforming and storing it effectively across various stages, which can include ingestion, processing, storage, and analysis.

Understanding the components and best practices of data pipeline architecture is essential whether you are a data scientist, an engineer, or an analytics professional. The evolution of this architecture has seen paradigms like ETL (Extract, Transform, Load), ELT (Extract, Load, Transform), and real-time streaming gain prominence. You must consider factors such as data sources, volume, velocity, and the specific needs of your business to determine the most suitable pipeline architecture.

Fundamental Concepts of Data Pipelines

When constructing a data pipeline, the central focus should be on how data moves from its source to its destination, a process that typically includes steps known as extract, transform, and load (ETL).

Defining Data Pipelines

A data pipeline is a set of processes that automate the flow of data from one point to another. Typically, data is extracted from one or more sources, transformed into a format suitable for analysis, and then loaded into a destination system for storage or further processing.

Data Pipeline Components

The components that make up a data pipeline include:

- Source: The origin point where data is collected, which could be databases, CRM systems, or real-time data streams.

- Destination: The endpoint, such as a data warehouse, where the processed data is stored.

- Extract: The process of retrieving data from the source.

- Transform: The application of functions to convert the extracted data into a usable format.

- Load: The final phase where transformed data is moved to the destination.

To illustrate, let's look at a simple table representing a basic ETL process:

Data Sources and Destinations

Data sources are the starting points in the journey of data flow, while destinations are the final stopovers where data is stored, analyzed, and made available for consumption.

Types of Data Sources

Your data pipelines will begin with data sources—these are the genesis where data is born or collected. Consider the various types of sources available:

- Databases: Traditional relational databases like MySQL or PostgreSQL, or NoSQL databases such as MongoDB.

- APIs: Sources delivering data in real-time or batch processes through application programming interfaces (APIs).

- Files: Flat files like CSV, JSON, or logs that contain data ready for ingestion.

- Cloud Storage: Services like Amazon S3 where data can reside in a highly available and scalable environment.

A well-architected data pipeline ensures compatibility with these diverse data origins.

Choosing the Right Destination

Deciding on the best destination for your data hinges on the intended use:

- Data Warehouses: Ideal for structured data requiring complex queries and analysis, like BigQuery or Snowflake.

- Data Lakes: Storage repositories like Amazon S3 that hold vast amounts of raw data in its native format.

- Real-time Analytics Platforms: Designed for immediate analysis and action, systems like Apache Kafka enable swift insight generation.

Choose your destination based on factors such as the volume of data, the need for scalability, and the type of analytics you plan to perform.

Data Pipeline Processes

Data pipeline processes are fundamental components that dictate how data is moved and transformed across systems within an organization. These processes are crucial for ensuring that data is ready for analysis, reporting, and making data-driven decisions.

Extraction, Transformation, and Loading (ETL)

Extraction is the first step in the ETL process and involves retrieving data from various sources. This can include databases, CRM systems, and more. The transformation stage follows, where the extracted data is cleaned, normalized, and enriched to conform to business rules and analytical needs. Finally, the Loading phase involves transferring the transformed data into a target data warehouse or database for further use.

Data Ingestion and Collection

Data ingestion refers to the process of obtaining and importing data for immediate use or storage in a database. Data collection is the systematic gathering of information from various sources for a specific purpose, and it is a subset of the larger ingestion process. This process ensures that data is available in a usable form for further processing and analysis activities.

Batch vs. Streaming Processing

Batch processing refers to the method where data is collected in groups or batches over a period of time before being processed. Usually, it is scheduled to run at specific intervals. In contrast, streaming processing deals with data in real-time, processing data as soon as it arrives.

Architecture and Design Principles

Building Scalable Architectures

Scalability is a cornerstone of effective data pipeline architecture. As your data volume grows, your system should have the capacity to handle increased load without compromising on performance. It's important to design your architecture so that it can scale out (horizontally) by adding more nodes or scale up (vertically) with more powerful machines as necessary. Additionally, utilizing principles of modularity—where components can be independently scaled—and distributed processing can significantly enhance the scalability of your data pipelines.

- Modularity: Ensures individual components can be scaled or improved without impacting the entire system.

- Distributed Processing: Facilitates data processing across multiple computing resources, improving scalability and fault tolerance.

Designing for Performance

To design for performance, focus on optimizing the data flow within the pipeline. This means selecting efficient storage solutions, minimizing data movement, and using caching to improve access times. It also involves using appropriate data formats and compression techniques to speed up data transfer and processing.

- Efficient Storage: Choose the right storage technology (like columnar databases for analytics) to optimize for read and write speeds.

- Data Formats and Compression: Use formats like Avro or Parquet that offer both compression and fast serialization/deserialization for big data workloads.

To ensure high performance, consider data management techniques that can help streamline and expedite the flow of data within your pipeline.

Data Storage and Computing Frameworks

Storing and processing data efficiently are fundamental components of data pipeline architecture. Your choice of storage and computation frameworks determines not only how data is housed but also how it's analyzed and utilized for decision-making.

Data Lakes and Data Warehouses

Data Lakes are vast pools of raw data stored in its native format until needed. Hadoop, an open-source framework, enables the distributed processing of large data sets across clusters of computers using simple programming models. A data lake, unlike traditional databases, is designed to handle a wide variety of data types, from structured to unstructured.

Data Warehouses, on the other hand, are centralized repositories where data is already filtered and structured. A prime example of a modern data warehouse solution is Databricks, which combines the power of data warehouses and data lakes, offering an analytics platform on top of a data lake architecture.

Examples of Storages:

- Data Lake:

- Use case: Big data processing, real-time analytics

- Example: Apache Hadoop

- Data Warehouse:

- Use case: Business intelligence, aggregated reporting

- Example: Databricks

Computing Frameworks and Tools

Apache Spark is a powerful, open-source processing engine designed particularly for large-scale data processing and analytics, providing high-level APIs in Java, Scala, Python, and R.

Here’s an example of using Spark for scripting and job orchestration:

This practical example demonstrates how you can initialize a session in Apache Spark, which is a stepping stone for performing data manipulation tasks in your data pipeline.

Computing Tools:

- Apache Spark:

- Strengths: Fast processing, in-memory computation, ease of use

- Databricks:

- Strengths: Unified platform, collaborative, built on top of Spark

Transformation and Processing Techniques

Data Transformation Methods

Data transformation encompasses a variety of methods tailored to reform raw data into a format suitable for analysis and reporting. ETL (Extract, Transform, Load) and

ELT (Extract, Load, Transform) are two primary approaches.

- ETL: Data is extracted from sources, transformed into the desired format, and then loaded into a data warehouse.

- Extraction: Data is pulled from the original source.

- Transformation: Data is cleansed, mapped, and converted.

- Loading: Data is deposited into a target database or warehouse.

- ELT: The loading phase precedes the transformation, often used in cloud-native data warehouses.

- Extraction: Data is retrieved from source systems.

- Loading: Data is initially loaded into a data lake or warehouse.

- Transformation: Data is transformed within the storage platform.

By employing these methods, you ensure that the data in your streaming data pipeline is of high quality and ready for complex analysis and business intelligence tasks.

Stream Processing and Real-Time Requirements

Stream processing involves the continuous ingestion, processing, and analysis of data as it's created, without the need to store it first.

- Kafka Streams, Apache Flink, and Spark Streaming are popular frameworks used for stream processing. They enable:

- Ingestion: Real-time collection of streaming data from various sources.

- Processing: Immediate computation, aggregation, or transformation of data as it flows through the system.

Here's a basic example of a real-time stream processing command using Apache Kafka:

Real-time processing ensures that decisions and actions are based on the freshest data, a non-negotiable in industries like finance and online services where millisecond-level differences matter. Through such processing, real-time data transformation ensures accurate and timely analytics critical for reactive business strategies.

Data Orchestration and Workflow Automation

Orchestration Tooling

Orchestration tooling refers to the systems and software that govern how different data processing tasks are executed in a coordinated manner. For your data to move seamlessly from source to destination, orchestration tools like Azure's pipeline orchestration provide the capacity for scheduling, monitoring, and managing workflows.

Automating Data Workflows

Automating data workflows is about minimizing manual interventions to ensure that the data is processed and moved efficiently. Technologies such as ELT (extract, transform, load) pipelines have revolutionized the way data is handled, catering to the demanding needs of big data analytics. The principle behind workflow automation is to streamline repetitive processes, therefore reducing the likelihood of errors and freeing up human resources for more complex tasks.

For instance, when automating an ELT pipeline, the following elements are automated:

- Extraction: Pulling data from the original source.

- Transformation: Converting data into a format suitable for analysis.

- Loading: Inserting data into the final target, such as a data warehouse.

Data Quality, Governance, and Compliance

In the realm of data management, data quality and governance are foundational to ensuring that information is accurate, reliable, and used in compliance with industry regulations. Such foundations guide organizations to instill robust practices for maintaining high-quality data across their pipelines.

Ensuring Data Quality

Data quality encompasses more than just the accuracy of data; it also refers to the reliability, relevance, consistency, and timeliness of the data within your systems. To ensure these qualities, your data pipeline must include mechanisms for:

- Validation: Routine checks to confirm that data meets specific criteria and quality thresholds.

- Cleansing: Processes to refine and correct data, removing inaccuracies and duplications.

For example, implementing an automated validation check can ensure incoming data adheres to predefined formats and value ranges.

Data Governance Frameworks

Data governance is a collection of practices and processes that enable organizations to manage their data assets effectively. A solid data governance framework establishes who is accountable for data assets and how those assets are used. Some key elements to consider are:

- Roles and Responsibilities: Clearly defining who manages and oversees data assets.

- Policies and Procedures: Documenting how data should be handled, sharing guidelines, and compliance mandates.

These components work together to support compliance with regulations like the GDPR for European data or HIPAA for health information in the United States.

Data governance ensures the strategic use of data in ways that are consistent with organizational goals and regulatory requirements. For instance, adherence to data governance best practices can significantly enhance the trustworthiness of your data analytics outcomes.

Organizations must also ensure that their data governance aligns with legal frameworks like the California Consumer Privacy Act (CCPA), which gives consumers rights over the data companies collect about them. A thorough understanding of these data regulatory requirements can inform your data governance policies to maintain compliance and avoid legal pitfalls.

Advanced Topics in Data Pipeline Architecture

Handling Large Volumes of Data

When dealing with large volumes of data, scalability becomes a critical aspect of your data pipeline architecture. Distributed computing frameworks like Apache Hadoop and Apache Spark allow for the processing of large datasets across clusters of computers using simple programming models.

It's essential to design your data pipeline to scale horizontally, ensuring it can handle an increase in data volume without a loss in performance. For instance, streaming data platforms like Apache Kafka are built to handle high throughput and low-latency processing for large data streams.

Complex Event Processing and Dependencies

Complex event processing (CEP) is essential when you aim to identify meaningful patterns and relationships from a series of data events. This involves tracking and analyzing data in real-time to identify opportunities or threats.

Managing dependencies in data processes is critical. It involves ensuring that the downstream tasks are executed in the precise order required by your business requirements. A tool like Apache Airflow allows you to define, schedule, and monitor workflows using Directed Acyclic Graphs (DAGs), where each node represents a task, and edges define dependencies.

Creating a responsive data pipeline architecture suited to your organization’s needs involves a deep understanding of the data at hand and the business contexts they serve. Advanced tools and frameworks are available to manage and process both large volumes and complex data efficiently while taking into account necessary dependencies to meet your business goals.

Cloud-based Data Pipelines

Leveraging Cloud Services

Using cloud services like Amazon S3 for storage, Microsoft Azure for computation, and SaaS platforms such as Snowflake for data warehousing, simplifies building a data pipeline. You can process vast amounts of data with Google BigQuery, and integrate various data sources and destinations effortlessly. These services often provide:

- Elasticity: Automatically scale resources up or down based on demand.

- High availability: Ensure your data is available when you need it with cloud-hosted solutions.

Cloud-native Tools and Services

Cloud-native tools are designed to maximize the advantages of the cloud computing delivery model. Services like Snowflake and platforms such as Google BigQuery harness cloud-native capabilities to provide:

- Seamless integration: Work fluidly with a variety of data formats and sources.

- Cost-effective solutions: Pay for only what you use and minimize upfront investments.

For instance, let's look at a simple workflow to understand how cloud-native tools are used:

- Data Collection: Data is gathered from various sources.

- Data Storage: The collected data is stored in Amazon S3.

- Data Processing: Leverage Azure functions to process the data.

- Data Analysis & Reporting: Utilize Google BigQuery for real-time analytics.

Here, each step uses a specific cloud-native service, showcasing the use of diverse cloud functionalities to execute distinct stages of the data pipeline.

Monitoring, Security, and Optimization

Implementing Monitoring and Alerting

Monitoring is the heartbeat of a data pipeline, signifying its health and performance. To set up efficient monitoring and alerting, you should:

- Choose Metrics Wisely: Determine which metrics are critical for your operation, such as throughput, latency, error rates, and system load.

- Establish Baselines: Identify what normal performance looks like to quickly recognize anomalies.

- Alerting Thresholds: Configure alerts to notify you when metrics deviate from these baselines to prevent data loss or pipeline failure.

For detailed insights on best practices, refer to Data Pipeline Monitoring.

Security Considerations

When it comes to security, you need to enforce protocols and tools that protect your data pipeline at every stage. Implement the following:

- User Access Control: Strictly manage who has access to the data pipeline and at what level.

- Data Encryption: Ensure all data in transit and at rest is encrypted.

- Regular Audits: Conduct scheduled audits to uncover any potential security issues.

End-to-End Data Pipeline Implementation

Implementing an end-to-end data pipeline is a comprehensive process that involves establishing a seamless flow from data collection to delivering actionable insights. This includes integrating various components, such as data extraction, transformation, and loading, into a cohesive system.

Full Lifecycle of a Data Pipeline

Initialization: First, you identify the data sources and establish mechanisms for data extraction. This could involve connecting to APIs, databases, or directly to a data repository.

ETL Process: Here's a typical flow:

- Extract: Retrieve data from the source.

- Transform: Convert data into a usable format, often applying filters and functions for cleaning and enrichment.

- Load: Store the transformed data into a data warehouse or database.

Maintenance & Monitoring: Continuous checks ensure data integrity and pipeline efficiency. You implement solutions for logging, error handling, and recovery processes.

Updates & Optimization: Based on performance analytics and evolving business goals, the pipeline is regularly optimized for speed, efficiency, and accuracy.

Decommissioning: When the pipeline is no longer required or is to be replaced, a systematic shutdown ensures that data and resources are appropriately handled.

Operationalizing Data Pipelines

Automated Workflows: The use of platforms like Azure Databricks facilitates the automation of data workflow processes, ensuring consistency and reliability.

Monitoring & Alerting: Tools are implemented for real-time monitoring, with alerts set up for failures or performance dips.

Version Control: You handle updates to pipeline code systematically, often through version control systems, allowing rollback to stable versions in case of issues.

Data Governance: Adherence to data compliance and governance policies is a necessity. This controls access and usage and ensures privacy standards are met.

Performance Metrics: Analyzing performance metrics to gauge pipeline health and make necessary enhancements is crucial for the pipeline's longevity.

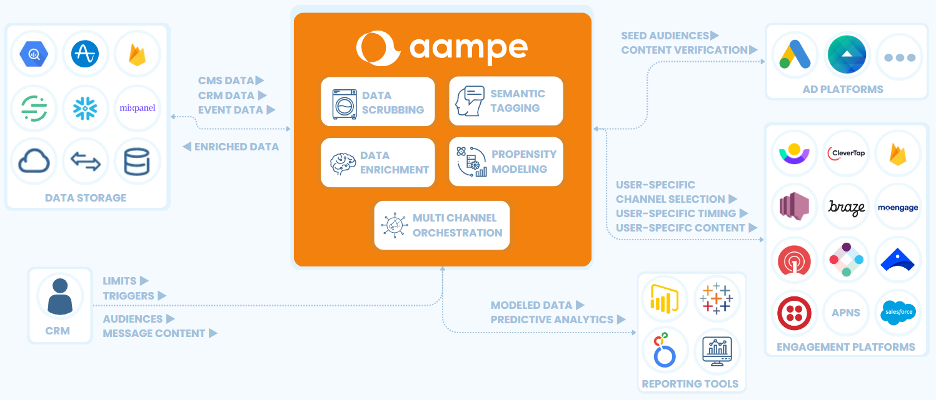

Looking to do more with your data?

Aampe helps teams use their data more effectively, turning vast volumes of unstructured data into effective multi-channel user engagement strategies. Click the big orange button below to learn more!