Data pipelines and ETL pipelines are foundational concepts in the world of data science, with distinct roles in the way information is gathered, processed, and stored.

Data pipelines consist of a series of steps that move data from one system to another. This pathway can include tasks beyond transfer, such as cleansing, aggregating, and preparing the data for analysis. Different types of data pipelines, batch and real-time, serve distinct needs, from processing large datasets at scheduled times to handling data streams almost instantaneously.

ETL, which stands for Extract, Transform, Load, is a specific type of data pipeline built around three operations. Data is first extracted from its source, then transformed to fit the requirements and structure of the target system, and finally loaded into a destination such as a database or data warehouse. Although every ETL pipeline is a data pipeline, not all data pipelines are ETL, as some involve different processes or only part of the ETL sequence. The relationship is like that between cars and vehicles: all cars are vehicles, but vehicles also include bikes, trucks, and more.

Understanding Data Pipelines

In the realm of data management, data pipelines play a vital role in ensuring that information flows accurately and efficiently from its origination to its destination. This systematic approach to data transfer is essential for organizations looking to leverage their data for strategic decisions.

Definition and Purpose

A data pipeline is a set of processes enabling the movement and transformation of data from various sources to a destination for storage and analysis. Its purpose is to facilitate data integration and ensure data quality through a streamlined, automated workflow. Data pipelines are crucial for companies to aggregate and organize large volumes of data, leading to improved data accessibility and reliability for data analysts and data engineers.

Components of a Data Pipeline

The architecture of a data pipeline typically consists of several key components:

- Data Sources: The starting points can include databases, APIs, file systems, or web services.

- Data Processing: This involves the transformation of raw data into a more useful format through filtering, aggregation, or summarization.

- Data Transportation: Moves the processed data to its final destination, using streaming technologies for real-time delivery or batch methods for larger, less frequent transfers.

- Storage: Finalized data is then stored in databases, data lakes, or warehouses.

- Monitoring & Management: These systems ensure the health and performance of the data pipeline and maintain high data quality.

By clearly understanding these components, you're better equipped to discern how data pipelines fit into your data infrastructure.
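As a rough illustration of how these components fit together, the sketch below wires a source, a processing step, a transport step, storage, and basic monitoring into one small program. Every name here (`source`, `process`, `run_pipeline`, `warehouse`) is hypothetical, and the in-memory dictionary only stands in for a real database or warehouse.

```python
import logging
from typing import Callable, Iterable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")  # monitoring & management


def source() -> Iterable[dict]:
    """Data source: hard-coded stand-in for a database, API, or file."""
    yield {"user": "a", "amount": 10.0}
    yield {"user": "b", "amount": -3.0}  # invalid record, filtered out below
    yield {"user": "a", "amount": 5.5}


def process(records: Iterable[dict]) -> dict:
    """Data processing: filter bad rows, then aggregate totals per user."""
    totals: dict = {}
    for rec in records:
        if rec["amount"] < 0:
            log.warning("dropping invalid record: %s", rec)
            continue
        totals[rec["user"]] = totals.get(rec["user"], 0.0) + rec["amount"]
    return totals


def run_pipeline(src: Callable, transform: Callable, storage: dict) -> dict:
    """Data transportation: move the processed data into the storage target."""
    result = transform(src())
    storage.update(result)  # storage: a dict standing in for a warehouse
    log.info("loaded %d rows", len(result))
    return storage


warehouse = run_pipeline(source, process, {})
```

In a production setting each function would typically be replaced by a dedicated system, but the division of responsibilities stays the same.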

Batch vs. Real-Time Data Processing

Batch processing handles large volumes of data at scheduled intervals. It is ideal for scenarios where immediate data access is not critical, and it often involves significant data transformation tasks. In contrast, real-time processing deals with streaming data, analyzing and transferring it nearly instantaneously. This is essential for use cases like financial transactions or social media feeds, where immediate insights are paramount.

Your choice between batch or real-time processing should align with your specific data management needs and the capabilities of your infrastructure.
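The difference can be sketched in a few lines of Python. This is a simplified illustration, assuming a hypothetical list of event values: the batch function waits for the full dataset and produces one result, while the streaming function yields an updated result after each event.

```python
from typing import Iterable, Iterator

events = [3, 7, 1, 9, 4]  # hypothetical event values


def batch_total(batch: Iterable[int]) -> int:
    """Batch: wait until the whole dataset is available, then process once."""
    return sum(batch)


def streaming_totals(stream: Iterable[int]) -> Iterator[int]:
    """Streaming: emit an updated running total as each event arrives."""
    total = 0
    for value in stream:
        total += value
        yield total


batch_result = batch_total(events)               # one answer at the end
stream_results = list(streaming_totals(events))  # one answer per event
```

Both approaches arrive at the same final total; the streaming version simply makes intermediate results available earlier, at the cost of doing work on every event.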

Exploring ETL Pipelines

ETL pipelines are a core framework in data warehousing, responsible for extracting, transforming, and loading data effectively.

ETL Defined

ETL stands for Extract, Transform, Load—a sequence of processes where data is extracted from various data sources, transformed into a format suitable for analysis and reporting, and then loaded into a final target destination, typically a data warehouse. The primary purpose of an ETL pipeline is to consolidate the data into a single, cohesive structure.

Steps in the ETL Process

The ETL process can be broken down into three key stages:

- Extraction: The extraction phase involves collecting and combining data from multiple sources. Its fundamental goal is to retrieve all required data efficiently while minimizing the impact on source systems.

- Transformation: This is the core stage where data undergoes cleansing, deduping, validation, and various data transformations to ensure it meets the desired quality and structure. The transformations may include:

  - Formatting changes

  - Data cleansing

  - Data enhancements

- Loading: The final step involves moving the now-transformed data into the target data warehouse or storage system. Loading can be done in batches or in real-time, with the aim of optimizing for quick data retrieval.
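The three stages above can be sketched with Python's standard library. This is a minimal example under stated assumptions: the CSV content, the `users` table, and the cleaning rules are all made up for illustration, and an in-memory SQLite database stands in for the target warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data with messy formatting, a duplicate, and a blank name.
RAW_CSV = """id,name,signup_date
1, Alice ,2024-01-05
2,BOB,2024-02-10
2,BOB,2024-02-10
3,,2024-03-01
"""


def extract(text: str) -> list[dict]:
    """Extract: read rows from the source (a CSV string here)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: format, validate, and deduplicate rows."""
    seen, clean = set(), []
    for row in rows:
        name = row["name"].strip().title()  # formatting change
        if not name:                        # validation: name is required
            continue
        key = int(row["id"])
        if key in seen:                     # deduplication on id
            continue
        seen.add(key)
        clean.append((key, name, row["signup_date"]))
    return clean


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT id, name FROM users ORDER BY id").fetchall()
```

Keeping each stage in its own function makes it easier to test the transformation rules separately from the source and the target.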

ETL Tools and Technologies

Various ETL tools help automate the ETL process. Popular tools include Informatica, Microsoft SQL Server Integration Services (SSIS), and Talend, which combine graphical interfaces with code to define ETL logic. These technologies help manage data movement and keep stored data consistent and reliable. Key capabilities to look for include:

- Automation features: Essential for scheduling and monitoring ETL processes.

- Connectivity: To handle diverse data sources and targets.

- Data cleansing capabilities: An integral part of quality data transformation.

The selection of an ETL tool is crucial for the overall efficiency of data warehousing and should be aligned with the specific needs of the project, such as the amount of data, the complexity of data transformations, and the frequency of data loading.

Comparing Data and ETL Pipelines

Data pipelines and ETL pipelines both support decision-making within businesses, but they differ in scope and in how much they change the data along the way.

Similarities and Differences

Data pipelines encapsulate the journey of data from origin to destination, inclusively handling the various stages of transport and optional transformation. Conversely, ETL pipelines—a subset of data pipelines—involve a specific sequence of operations: extracting data from data sources, transforming it to align with business needs or to enhance data quality, and loading it into a database, data lake, or warehouse.

Use Cases and Scenarios

Data pipelines are optimal for scenarios requiring high scalability and real-time data feeds, contributing significantly to business intelligence and reporting activities. They thrive on versatility, capable of serving both analytical and operational purposes without necessarily altering the data. Examples of data pipeline workloads include consolidating user activity logs for website analytics or streaming sensor data for real-time monitoring.

In comparison, ETL pipelines are ideal when data must be cleaned, enriched, or aggregated before analysis to ensure integrity. This is especially crucial for comprehensive reporting and for powering complex business analysis with clean and structured data. An ETL pipeline might transform sales data from multiple outlets into a unified format to feed into a business intelligence tool for performance analysis.
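To make the sales example concrete, here is a small sketch of unifying records from two outlets before analysis. The field names, units, and sample values are hypothetical; the point is only that each source gets its own mapping into one shared schema.

```python
# Hypothetical records from two outlets with different field names and units.
outlet_a = [{"sku": "X1", "qty": 2, "price_usd": 9.50}]
outlet_b = [{"product_code": "x1", "units": 1, "price_cents": 950}]


def normalize_a(rec: dict) -> dict:
    """Map outlet A's schema (dollars) onto the unified schema (cents)."""
    return {
        "sku": rec["sku"].upper(),
        "units": rec["qty"],
        "revenue_cents": round(rec["price_usd"] * 100) * rec["qty"],
    }


def normalize_b(rec: dict) -> dict:
    """Map outlet B's schema onto the unified schema."""
    return {
        "sku": rec["product_code"].upper(),
        "units": rec["units"],
        "revenue_cents": rec["price_cents"] * rec["units"],
    }


unified = [normalize_a(r) for r in outlet_a] + [normalize_b(r) for r in outlet_b]

# Aggregate the unified rows per SKU, ready for a reporting tool.
report: dict = {}
for row in unified:
    entry = report.setdefault(row["sku"], {"units": 0, "revenue_cents": 0})
    entry["units"] += row["units"]
    entry["revenue_cents"] += row["revenue_cents"]
```

Storing money as integer cents in the unified schema avoids floating-point rounding surprises when totals are summed later.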

Choosing Between Data Pipeline and ETL

Your choice between a data pipeline and an ETL pipeline hinges on your specific needs regarding data quality, complexity, and reporting requirements. If your focus is on speed and raw data integration from various sources with minimal transformation, a data pipeline could suit your needs. However, if your data requires rigorous transformation and cleaning to ensure accuracy for strategic decision-making, an ETL pipeline is likely the more fitting solution.

Employing an ETL pipeline can introduce layers of complexity but is often justified by the need for high-quality data in scenarios where accuracy is paramount. On the flip side, leveraging a data pipeline might be more straightforward and flexible, especially when dealing with large volumes of data in various formats.

Remember, the integration of robust data management practices is critical to the success of business processes, and choosing the right framework is a cornerstone of this strategy.

Advanced Concepts in Data Handling

In navigating the complex terrain of data management, it's crucial to understand the infrastructure and processes that allow for efficient data storage, transformation, and compliance. This includes distinguishing between data lakes and warehouses, scaling data transformation, and maintaining governance and compliance.

Data Lakes vs. Warehouses

Data Lake: A vast pool of raw data whose purpose is not defined until the data is needed. Data lakes support machine learning and real-time analytics because they store data in its native format and can handle structured, semi-structured, and unstructured data.

- Architecture: Typically cloud-native; examples include Amazon S3 or Azure Data Lake Storage.

Data Warehouse: A repository for structured, filtered data that has already been processed for a specific purpose. Data warehouses are well suited to reporting and data visualization and are traditionally used by business analysts.

- Systems: Include Snowflake, Amazon Redshift, and Google BigQuery.

- Data Quality: More easily controlled due to its structured nature.

Data Transformation at Scale

ELT (Extract, Load, Transform) is a more recent variant of ETL designed to handle massive volumes of data. Raw data is loaded first, and the transformation then happens within the data storage system, which allows for:

- Scalability: Systems can grow with data demands, utilizing cloud resources like Google BigQuery.

- Performance: Faster querying times suitable for large-scale data visualizations.
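The ELT ordering can be illustrated with a short sketch. It assumes an in-memory SQLite database as a stand-in for a cloud warehouse, and the table names and sample orders are invented: raw records are loaded unchanged, and the transformation is then expressed as SQL that runs inside the storage system.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Extract + Load: land the raw records unchanged in a staging table.
raw_orders = [("north", "12.50"), ("south", "7.25"), ("north", "3.00")]
conn.execute("CREATE TABLE raw_orders (region TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", raw_orders)

# Transform: run SQL inside the storage system to build an analysis table.
conn.execute(
    """
    CREATE TABLE orders_by_region AS
    SELECT region, SUM(CAST(amount AS REAL)) AS total
    FROM raw_orders
    GROUP BY region
    """
)

totals = dict(conn.execute("SELECT region, total FROM orders_by_region"))
```

Because the raw table is preserved, the transformation can be rewritten and rerun later without extracting the data again.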

Governance and Compliance

To secure data integrity and comply with standards like HIPAA, you must enforce strict governance and compliance measures:

- Data Quality: Ensure accuracy, consistency, and context of data.

- Regulatory Compliance: Adhere to laws and regulations to protect sensitive information.

Strategies:

- Implement role-based access control (RBAC) to limit access to sensitive data.

- Maintain audit logs and monitor access patterns.
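As a simplified sketch of the two strategies above, the following combines a role-based permission check with an audit trail. The roles, permission strings, and `is_allowed` helper are hypothetical, and a real system would persist the log in tamper-resistant storage rather than a Python list.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"read:aggregates"},
    "engineer": {"read:aggregates", "read:raw", "write:raw"},
}

audit_log: list[dict] = []


def is_allowed(role: str, permission: str) -> bool:
    """Check a permission against the role and record the attempt."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(
        {
            "time": datetime.now(timezone.utc).isoformat(),
            "role": role,
            "permission": permission,
            "allowed": allowed,
        }
    )
    return allowed
```

Calling `is_allowed("analyst", "read:raw")` would return `False` and still append an entry to `audit_log`, so denied attempts remain visible when access patterns are reviewed.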

Proper handling of advanced concepts in data management accelerates your ability to glean insights and drive decision-making while maintaining the integrity and security of your data systems.

Technological Integration and Adaptation

In the evolving landscape of data management, it is crucial for you to understand how technological integration and adaptation can streamline your data pipeline operations. Leveraging modern infrastructure and practices ensures that application data flows seamlessly through systems like CRM and ERP while maintaining data integrity and quality.

Data Pipeline Infrastructure

Modern data pipelines rely on a robust data infrastructure that efficiently supports data ingestion, transformation, and storage processes. Open-source platforms and tools are often integral to these pipelines, providing a foundation for processes such as data cleansing and real-time processing. For example, Apache Kafka is a widely used open-source tool for real-time data handling.

Integration with Cloud Platforms

A cloud data warehouse is a pivotal component of contemporary data infrastructures. Integration with cloud platforms like Amazon Web Services (AWS) allows for scalable data ingestion and storage. For instance, the AWS Data Pipeline service is a web service designed to facilitate the reliable processing and movement of data between AWS compute and storage services and on-premises data sources.

Adopting Modern Data Pipeline Practices

The adoption of modern data pipeline practices involves leveraging APIs for integration and implementing cloud data solutions for enhanced scalability and performance. Industries like eCommerce & retail benefit significantly from modern data pipelines that support real-time processes and ensure data reliability and compliance.

Your ability to adapt to evolving technologies is imperative for maintaining a competitive edge and ensuring data accuracy and actionable insights.

Real-World Applications and Case Studies

In this section, we explore how data pipelines and ETL processes are applied in practice, demonstrating their impact on market analytics, e-commerce, and machine learning.

Market Analytics and E-commerce

In the e-commerce sector, data pipelines harness data from various sources, including social media and e-commerce platforms, to deliver real-time analytics. These pipelines enable businesses to capture customer interactions, integrate them with CRM systems, and utilize advanced marketing tools to drive decision-making processes. For instance, a data pipeline analyzes social media trends and website traffic patterns to tailor marketing campaigns that align with consumer behavior.

Machine Learning and Predictive Modeling

For machine learning applications, data pipelines play a crucial role in feeding real-time data for predictive modeling. Companies leverage these pipelines to process data continuously, enabling algorithms to identify trends and make predictions for use cases like inventory management or recommendation systems. Moreover, businesses use ETL processes to prepare and cleanse data, which is critical for training accurate machine learning models. Predictive modeling relies on the high-quality, processed data that these technologies provide.

Remember, the proper application of data pipelines and ETL processes is paramount to harnessing the full potential of data in informing business practices and future strategies.

Challenges and Best Practices

In navigating the complexities of ETL pipelines and data pipelines, you'll encounter key challenges and identify best practices that are vital for successful implementation and operation.

Handling Data at Scale

When dealing with large volumes of data, ensuring efficiency and accuracy can be taxing. Your ETL processes should be scalable, able to handle increases in data volume without degradation in performance. One best practice is to employ parallel processing where possible, splitting tasks across multiple servers or processors to speed up throughput.
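A minimal sketch of this idea using Python's standard library is shown below. The chunk size, worker count, and the sum-of-squares workload are placeholders, and a thread pool on one machine only stands in for the multiple servers or processors mentioned above; CPU-heavy work in Python would more likely use processes or a distributed framework.

```python
from concurrent.futures import ThreadPoolExecutor


def transform_chunk(chunk: list[int]) -> int:
    """Hypothetical per-chunk work: sum of squares."""
    return sum(x * x for x in chunk)


def split(data: list[int], size: int) -> list[list[int]]:
    """Split the dataset into consecutive chunks of at most `size` items."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_total(data: list[int], chunk_size: int = 4, workers: int = 4) -> int:
    """Process chunks concurrently, then combine the partial results."""
    chunks = split(data, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(transform_chunk, chunks)
        return sum(partials)
```

The pattern works when chunks can be processed independently and the partial results can be combined at the end, as with sums, counts, or per-key aggregates.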

Ensuring Data Security and Privacy

Security and privacy are non-negotiable when it comes to data management. You must adhere to regulations like GDPR and HIPAA, implementing robust encryption and access controls. Regularly updated security protocols and comprehensive data governance policies are crucial for maintaining trust and compliance.

Optimization Techniques

To fine-tune your data or ETL pipelines, introduce optimization techniques such as caching frequently accessed data and deduplicating records to reduce redundancy. These steps can significantly improve the speed and efficiency of your data operations.
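Both techniques can be sketched briefly in Python. The lookup table, the `country_for` function, and the record layout are hypothetical; `functools.lru_cache` is the standard-library cache used here, and deduplication keeps the first record seen for each key.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def country_for(ip_prefix: str) -> str:
    """Hypothetical expensive lookup; results are cached per argument."""
    return {"10.0": "US", "10.1": "DE"}.get(ip_prefix, "unknown")


def deduplicate(records: list[dict], key: str) -> list[dict]:
    """Keep the first record seen for each key value, preserving order."""
    seen = set()
    unique = []
    for rec in records:
        if rec[key] not in seen:
            seen.add(rec[key])
            unique.append(rec)
    return unique
```

Repeated calls to `country_for` with the same argument are served from the cache, which `country_for.cache_info()` can confirm. Caching suits lookups whose results rarely change; data that changes often needs an expiry or invalidation strategy.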

Looking to do more with your data?

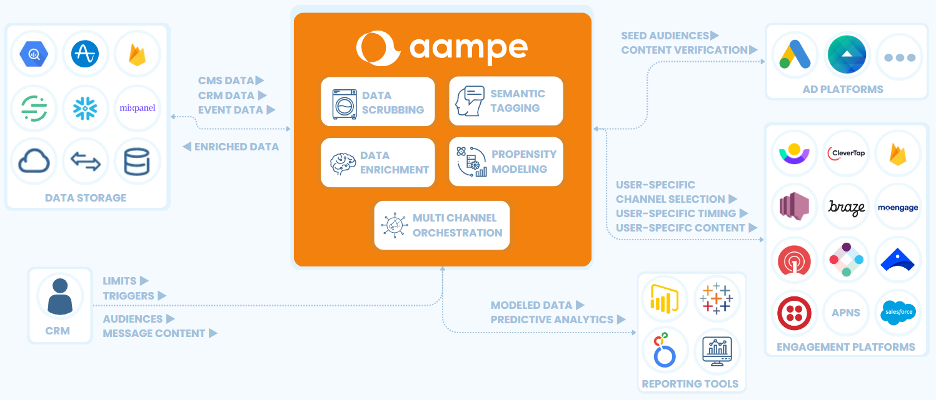

Aampe helps teams use their data more effectively, turning vast volumes of unstructured data into effective multi-channel user engagement strategies. Click the big orange button below to learn more!